Continuing from yesterday’s post, I want to get a sense of the reading level of Reddit.

The Jupyter Notebook for this little project is found here. After considering the layout of yesterday’s post, I prefer the minimalistic style whereby I mention the highlights of my analysis. If you want to see the code, you can find it in the Jupyter Notebook.

Upgrading the Flesch Reading Ease function

There were a number of problems with the flesch_reading_ease() function defined yesterday. For one, the code would break if it encountered a word that was not in the dictionary. I therefore modified the function as follows:

- Skip words that are not in the dictionary so that the program does not break

- Ensure there is no division by 0, returning None instead (note: this is integrated with the count_syllables() function)

- Add a show_details argument that shows the number of sentences, words, syllables, and unknown words making up the Flesch Reading Ease score, for debugging

    import re

    import nltk

    # count_syllables() and readability_ease() are helpers defined elsewhere in the notebook.
    def flesch_reading_ease(text, show_details=False):
        ## Preprocessing
        text = text.lower()
        sentences = nltk.tokenize.sent_tokenize(text)
        words = nltk.wordpunct_tokenize(re.sub('[^a-zA-Z_ ]', '', text))
        syllables = [count_syllables(word) for word in words]

        ## Count sentences, words, and syllables
        ## Skip words that do not exist in dictionary (they are counted as -1)
        num_sentences = len(sentences)
        num_unknown_words = syllables.count(-1)
        num_words = len(words) - num_unknown_words
        num_syllables = sum(s for s in syllables if s > 0)

        ## Calculate
        if num_sentences == 0 or num_words == 0:
            return None
        fre = readability_ease(num_sentences, num_words, num_syllables)

        ## Return the raw counts instead of the score when debugging
        if show_details:
            return {
                "sentence": num_sentences,
                "word": num_words,
                "syllables": num_syllables,
                "unknown": num_unknown_words
            }
        return fre
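The readability_ease() and count_syllables() helpers are not repeated in this post. As a rough sketch only, assuming readability_ease() implements the standard Flesch Reading Ease formula and count_syllables() returns -1 for unknown words (as the syllables.count(-1) line suggests), the two pieces might look like this. The TOY_SYLLABLES lookup is a hypothetical stand-in for a real pronunciation dictionary, not what the notebook uses.

```python
# Hypothetical stand-in for a pronunciation dictionary (assumption for illustration).
TOY_SYLLABLES = {"the": 1, "cat": 1, "sat": 1, "on": 1, "mat": 1, "reading": 2}


def count_syllables(word):
    """Return the syllable count, or -1 if the word is unknown (assumed convention)."""
    return TOY_SYLLABLES.get(word.lower(), -1)


def readability_ease(num_sentences, num_words, num_syllables):
    """Standard Flesch Reading Ease formula (assumed to match the notebook's helper)."""
    words_per_sentence = num_words / num_sentences
    syllables_per_word = num_syllables / num_words
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


# One sentence containing one unknown word ("xyzzy"), which is skipped.
syllables = [count_syllables(w) for w in "the cat sat on the xyzzy mat".split()]
known = [s for s in syllables if s > 0]
score = readability_ease(1, len(known), sum(known))
```

Because the unknown word is dropped from both the word count and the syllable count, it does not change the syllables-per-word ratio, only the words-per-sentence ratio.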

Same thread, different question

In Day03, we accessed Reddit comments through an API to do sentiment analysis. Let’s now find the Flesch Reading Ease (FRE) of the same thread: Men of Reddit, what’s the biggest “I’m a princess” red flag? (thewhackcat, 2017).

We do so by calculating the FRE score for each comment and then averaging the scores. We find that the comments are at a 7th grade level (FRE = 71.9), which means they are “Fairly easy to read”.
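The averaging step can be sketched as follows. This is a minimal illustration, assuming comments with no usable words come back as None and are left out of the average; average_fre() is a hypothetical helper name and the scores are made up, not taken from the thread.

```python
from statistics import mean


def average_fre(scores):
    """Average per-comment FRE scores, ignoring comments scored as None."""
    valid = [s for s in scores if s is not None]
    return mean(valid) if valid else None


# Made-up scores for illustration only; None marks a comment with no usable words.
example_scores = [80.0, None, 65.5, 70.5]
average = average_fre(example_scores)
```

Note that this gives every comment equal weight, so a one-line comment counts as much as a long one.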

More threads

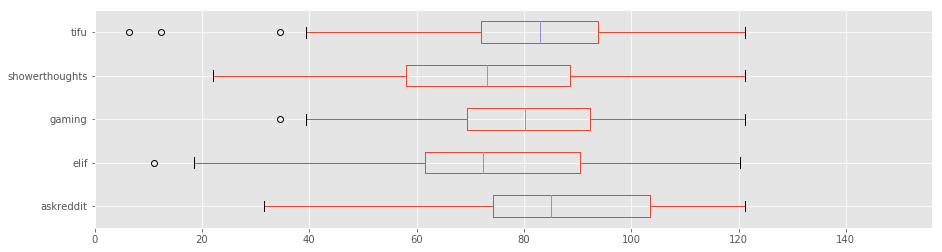

What about other threads? I selected a thread from a few popular subreddits:

- Ask reddit | What’s your favorite insult that does not contain a curse word?

- Explain like I’m five | ELI5: What would happen if you take a compass into space?

- Gaming | When the game knows shit is about to go down

- Shower thoughts | College would be a lot more affordable if they stopped requiring courses that have nothing to do with people’s major.

- TIFU | TIFU by being courteous

    import random

    import pandas as pd

    ## Parse reddit comments and calculate FRE for 75 random comments
    ## Note: the number of scores is equal across categories
    df = {}
    for k in urls.keys():
        data = parse_reddit(reddit, urls[k])
        fres = [flesch_reading_ease(text) for text in data]
        fres = [fre for fre in fres if fre is not None]
        df[k] = random.sample(k=75, population=fres)
    df = pd.DataFrame(df)

| FRE score | 0-30 | 30-50 | 50-60 | 60-70 | 70-80 | 80-90 | 90+ |
|---|---|---|---|---|---|---|---|
| Reading level | College grad | College | 10-12th | 8-9th | 7th | 6th | 5th |
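To turn a score into one of the reading levels listed above, a simple lookup is enough. The sketch below is an illustration, not code from the notebook: reading_level() and FRE_BANDS are hypothetical names, and sending a boundary score (for example exactly 70) to the easier band is my own assumption, since the bands as listed share their endpoints.

```python
# Lower bound of each band and its reading level, from the score bands listed above.
FRE_BANDS = [
    (90, "5th"),
    (80, "6th"),
    (70, "7th"),
    (60, "8-9th"),
    (50, "10-12th"),
    (30, "College"),
    (0, "College grad"),
]


def reading_level(fre):
    """Return the reading level for a score; boundary scores go to the easier band."""
    for lower_bound, level in FRE_BANDS:
        if fre >= lower_bound:
            return level
    # The formula can go below 0 for very dense text; treat that as the hardest band.
    return "College grad"


level = reading_level(71.9)
```

With the average of 71.9 reported earlier, this lookup lands in the 70-80 band, i.e. a 7th grade level.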

From the boxplot, we find:

- People’s shower thoughts are relatively comprehensive

- ELI5, which stands for “explain like I’m five”, would not be understood by five-year-olds

- Asking Reddit is easily understood by a younger audience