Today I want to implement the other algorithm for measuring feature importance: connection weights.

Ibrahim, OM. 2013. A comparison of methods for assessing the relative importance of input variables in artificial neural networks. Journal of Applied Sciences Research, 9(11): 5692-5700.

The Jupyter Notebook for this little project is found here.

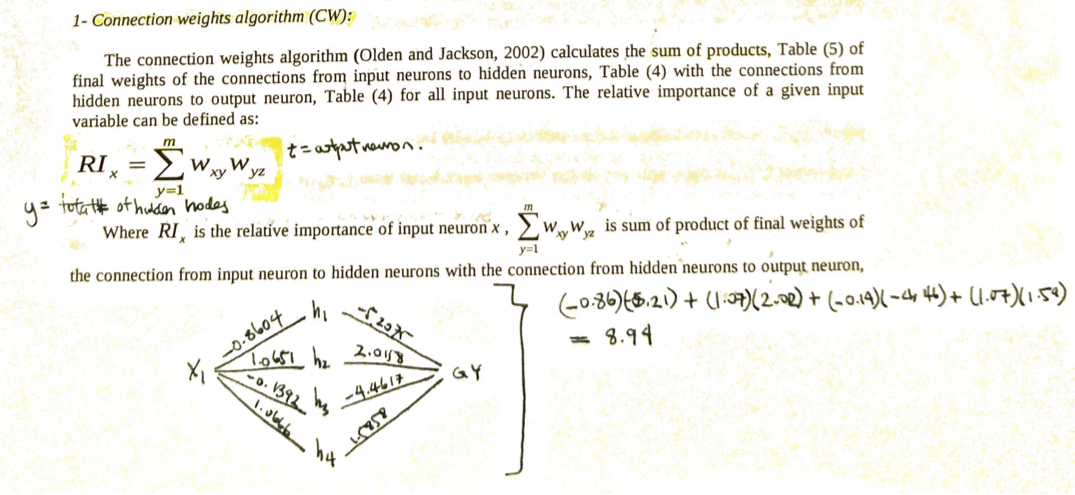

Connection weights

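The connection weights algorithm scores each input feature against each output by summing, over all hidden nodes, the product of the input-hidden weight and the hidden-output weight. Because the weights keep their signs, the result shows both the magnitude and the direction of a feature's contribution.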
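To make the "sum of products" concrete, here is a minimal sketch on a tiny made-up network. The weight values in `A` and `B` are hypothetical and chosen only so the arithmetic is easy to follow. The explicit triple loop and the matrix product give the same result.

```python
import numpy as np

# Hypothetical weights for a tiny network: 3 inputs, 2 hidden nodes, 2 outputs.
A = np.array([[ 0.5, -1.0],
              [ 2.0,  0.0],
              [-1.5,  1.0]])   # input-hidden, shape (3, 2)
B = np.array([[ 1.0, -2.0],
              [ 0.5,  1.0]])   # hidden-output, shape (2, 2)

# Sum of products written out explicitly.
cw_loop = np.zeros((A.shape[0], B.shape[1]))
for i in range(A.shape[0]):          # input feature
    for k in range(B.shape[1]):      # output
        for j in range(A.shape[1]):  # hidden node
            cw_loop[i, k] += A[i, j] * B[j, k]

# The same computation as a single matrix product.
cw = A @ B
assert np.allclose(cw_loop, cw)

print(cw)
# [[ 0. -2.]
#  [ 2. -4.]
#  [-1.  4.]]
```

Row `i`, column `k` holds the connection weight of input `i` for output `k`. Positive and negative products can cancel, as they do for the first input and first output here.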

Implementing the Connection Weights Algorithm (CW)

    import numpy as np

    def connection_weights(A, B):
        """
        Computes the connection weights algorithm.

        A = matrix of weights of input-hidden layer (rows=input & cols=hidden)
        B = matrix of weights of hidden-output layer (rows=hidden & cols=output)
        """
        # sum over hidden nodes of (input-hidden weight * hidden-output weight)
        cw = np.dot(A, B)
        # normalize so that all entries sum to 1 (100%) for relative importance
        ri = cw / cw.sum()
        return ri
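As a quick usage sketch, the function can be called on any pair of weight matrices with matching hidden dimensions. The random weights and the layer sizes (4 inputs, 5 hidden nodes, 3 classes) below are assumptions for illustration only, not the network from the notebook.

```python
import numpy as np

def connection_weights(A, B):
    # Compact copy of the function above so this snippet runs on its own.
    cw = np.dot(A, B)
    return cw / cw.sum()

# Hypothetical weights standing in for those of a trained network.
rng = np.random.default_rng(0)
A = rng.normal(size=(4, 5))   # 4 input features, 5 hidden nodes
B = rng.normal(size=(5, 3))   # 5 hidden nodes, 3 output classes

ri = connection_weights(A, B)
print(ri.shape)   # (4, 3): one row per feature, one column per class
print(ri.sum())   # approximately 1.0
```

Since the products are signed, individual entries of `ri` can be negative, and the normalization divides by the sum over the whole matrix rather than per class. The entries sum to 1 overall, but a single column need not.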

Visualizing variable importance by CW

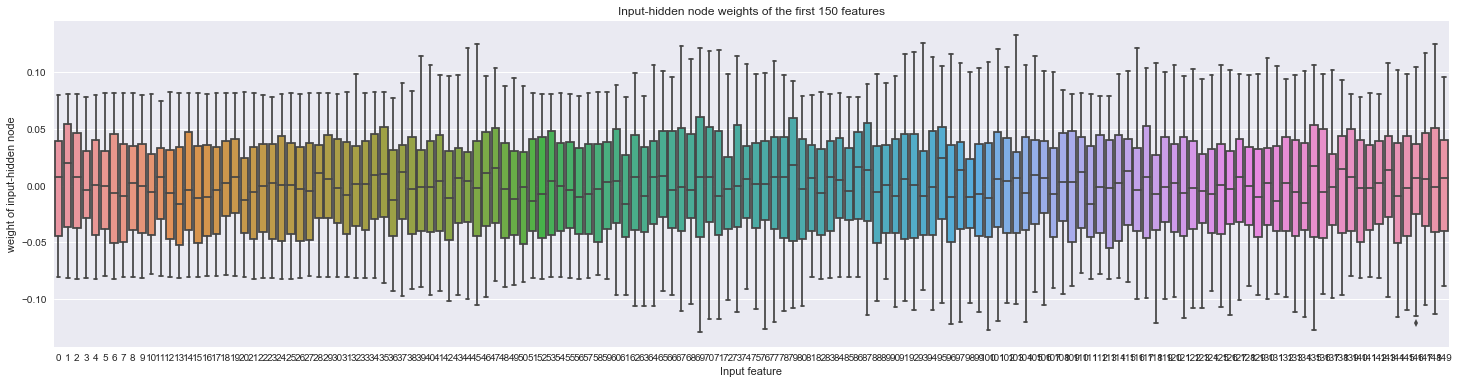

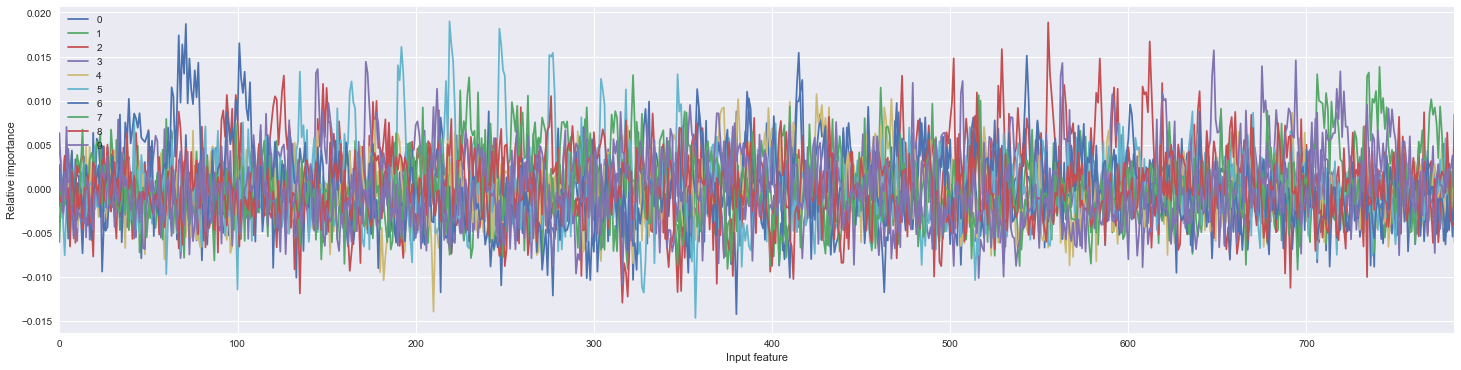

As with Garson’s algorithm, the weights of some input features vary more across the hidden nodes than those of others (box plot). Unlike Garson’s algorithm, however, the feature importance differs between the output classes (line plot).

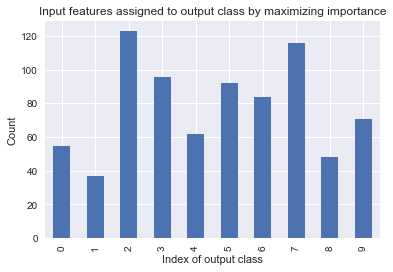

Assigning each input feature to the output class for which its importance is greatest, we find that the features are not uniformly distributed across the output classes (bar plot).
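The partitioning step can be sketched as follows. The `ri` matrix here is made up for illustration, and taking the signed maximum (rather than the largest absolute value) is an assumption about what "greatest" means.

```python
import numpy as np

# Hypothetical relative-importance matrix: 5 features (rows) x 3 classes (cols).
ri = np.array([
    [ 0.10, -0.05,  0.02],
    [ 0.01,  0.20, -0.10],
    [ 0.30,  0.05,  0.04],
    [-0.02,  0.03,  0.15],
    [ 0.12,  0.08,  0.07],
])

# Assign each feature to the class where its (signed) importance is largest.
best_class = ri.argmax(axis=1)

# Count how many features land in each class (the bar heights).
counts = np.bincount(best_class, minlength=ri.shape[1])

print(best_class)  # [0 1 0 2 0]
print(counts)      # [3 1 1]
```

To rank by strength regardless of direction, `np.abs(ri).argmax(axis=1)` would be the corresponding variant.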