Random Forest is similar to Bagging. The difference lies in how each tree chooses its splits:

Bagging

When samples are drawn with replacement (i.e., a bootstrap sample)

Random Forest

When samples are drawn with replacement AND a random subset of candidate features is used
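To make the two definitions concrete, here is a minimal sketch that builds both ensembles side by side. The synthetic data, the number of trees, and `max_features=0.5` are assumptions chosen for illustration, not settings from the notebook. `max_features` is set explicitly because, as far as I know, recent scikit-learn versions let `RandomForestRegressor` consider all features at each split by default.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import BaggingRegressor, RandomForestRegressor
from sklearn.tree import DecisionTreeRegressor

# Synthetic data stands in for the real dataset (an assumption for illustration).
X, y = make_regression(n_samples=200, n_features=10, noise=10.0, random_state=0)

# Bagging: every tree is fit on a bootstrap sample,
# and each split may look at all of the features.
bagging = BaggingRegressor(
    DecisionTreeRegressor(), n_estimators=50, bootstrap=True, random_state=0
)

# Random Forest: bootstrap samples AND only a random subset of the
# features (here half of them) is considered at each split.
forest = RandomForestRegressor(
    n_estimators=50, bootstrap=True, max_features=0.5, random_state=0
)

bagging.fit(X, y)
forest.fit(X, y)
```

The only structural difference between the two objects is the feature subsampling, which is what decorrelates the trees in a Random Forest.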

The Jupyter Notebook for this little project is found here.

Random Forest

from sklearn.ensemble import RandomForestRegressor

rf = RandomForestRegressor()

rf = rf.fit(X_train, y_train)

In Python's scikit-learn, Random Forest is provided by RandomForestClassifier (for classification) and RandomForestRegressor (for regression).
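The classifier follows the same fit/predict pattern as the regressor. The sketch below uses a small synthetic classification problem, which is an assumption for illustration and not part of the House Prices project.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Synthetic two-class data (an assumption for illustration).
X, y = make_classification(n_samples=200, n_features=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

clf = RandomForestClassifier(n_estimators=100, random_state=0)
clf.fit(X_train, y_train)

# score() reports mean accuracy for classifiers (and R^2 for regressors).
accuracy = clf.score(X_test, y_test)
print(f"Test accuracy: {accuracy:.3f}")
```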

Extremely Randomized Trees

Extremely Randomized Trees go one step further than Random Forest: the splitting thresholds of the candidate features are also drawn at random, which further reduces the variance of the model, usually at the cost of slightly more bias. In other words:

Extremely Randomized Trees

When samples are drawn with replacement AND a random subset of candidate features is used AND the best of the randomly generated thresholds is picked as the splitting rule
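The splitting rule in that definition can be sketched in plain NumPy. This is a simplified illustration, not scikit-learn's implementation: the function name `random_threshold_split` is hypothetical, one threshold is drawn uniformly between each candidate feature's minimum and maximum, and candidates are ranked by the weighted variance of the two child nodes.

```python
import numpy as np

def random_threshold_split(X, y, n_candidate_features, rng):
    """Return (score, feature, threshold) for the best randomly drawn split."""
    n_features = X.shape[1]
    # Random subset of candidate features, as in Random Forest.
    candidates = rng.choice(n_features, size=n_candidate_features, replace=False)
    best = None
    for j in candidates:
        lo, hi = X[:, j].min(), X[:, j].max()
        if lo == hi:
            continue  # constant feature, nothing to split on
        # One random threshold per feature instead of searching for the optimum.
        threshold = rng.uniform(lo, hi)
        left = X[:, j] <= threshold
        right = ~left
        # Weighted variance of the children; lower means a purer split.
        score = left.sum() * y[left].var() + right.sum() * y[right].var()
        if best is None or score < best[0]:
            best = (score, j, threshold)
    return best

# Toy data (an assumption): the target depends mostly on feature 2.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 6))
y = 3.0 * X[:, 2] + rng.normal(scale=0.1, size=100)

score, feature, threshold = random_threshold_split(X, y, n_candidate_features=3, rng=rng)
print(f"split on feature {feature} at threshold {threshold:.3f}")
```

A regular decision tree would instead scan every possible threshold for each candidate feature; skipping that search is what makes these trees "extremely" randomized and cheaper to build.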

from sklearn.ensemble import ExtraTreesRegressor

etr = ExtraTreesRegressor()

etr = etr.fit(X_train, y_train)

For more information, see Scikit-learn’s “Extremely Randomized Trees”.

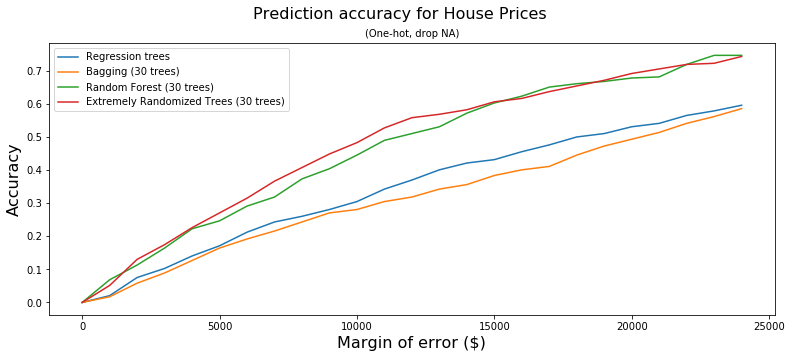

Tree comparison

Using the same Kaggle House Prices for Advanced Regression Techniques dataset as described on Day93, we found that the models that use random subsets of features (Random Forest and Extremely Randomized Trees) gave the most accurate predictions.
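A comparison of this kind could be set up roughly as follows. This is a sketch under stated assumptions, not the notebook's code: it uses synthetic data instead of the Kaggle dataset, 5-fold cross-validated RMSE as the metric, and hand-picked hyperparameters. Note that `bootstrap=True` is passed to `ExtraTreesRegressor` explicitly, because scikit-learn's default for that estimator is to train each tree on the whole dataset.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import BaggingRegressor, ExtraTreesRegressor, RandomForestRegressor
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeRegressor

# Synthetic stand-in for the House Prices data (an assumption).
X, y = make_regression(
    n_samples=300, n_features=20, n_informative=5, noise=15.0, random_state=0
)

models = {
    "Regression Tree": DecisionTreeRegressor(random_state=0),
    "Bagging": BaggingRegressor(DecisionTreeRegressor(), n_estimators=50, random_state=0),
    "Random Forest": RandomForestRegressor(
        n_estimators=50, max_features=0.5, random_state=0
    ),
    "Extremely Randomized Trees": ExtraTreesRegressor(
        n_estimators=50, max_features=0.5, bootstrap=True, random_state=0
    ),
}

scores = {}
for name, model in models.items():
    # The scorer returns negative RMSE, so flip the sign to report RMSE.
    cv = cross_val_score(model, X, y, cv=5, scoring="neg_root_mean_squared_error")
    scores[name] = -cv.mean()
    print(f"{name}: RMSE = {scores[name]:.2f}")
```

The ranking on synthetic data need not match the ranking on the real dataset; the sketch only shows how the four models can be evaluated on equal footing.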

Note: Regression Tree was described on Day93, Bagging on Day94, and Random Forest and Extremely Randomized Trees today.