I saw this tweet today and wanted to see how deep learning compares with decision trees in predicting Kaggle House Prices.

Don't use deep learning your data isn't that big https://t.co/q9LZkUyoR5 pic.twitter.com/pi25EvZ8Tb

— Simply Statistics (@simplystats) May 31, 2017

In this tweet, “Don’t use deep learning your data isn’t that big”, SimplyStats (2017) says:

“When your dataset isn’t that big, doing something simpler is often both more interpretable and it works just as well due to potential overfitting.”

“For low training set sample sizes it looks like the simpler method (just picking the top 10 and using a linear model) slightly outperforms the more complicated methods. As the sample size increases, we see the more complicated method catch up and have comparable test set accuracy.”

The Jupyter Notebook for this little project is found here.

Non-deep learning

In my previous posts, I predicted house prices using various decision trees with comparable accuracy.

| Approach | Day | Accuracy when “Margin of Error” is $5000 |
|---|---|---|
| Regression Tree | Day93 | ~0.15 |
| Bagging | Day94 | ~0.15 |
| Random Forest & Extremely Randomized Tree | Day95 | ~0.25 |
| Boosting | Day96 | ~0.11 |

Note: The models have not been optimized.
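One way to read the accuracy column is as the fraction of predictions that land within the margin of error of the true sale price. The sketch below assumes that definition; the notebook's exact calculation may differ, and the function name and the sample prices are made up for illustration.

```python
def accuracy_within_margin(y_true, y_pred, margin=5000):
    """Fraction of predictions whose absolute error is at most `margin`."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    # Count predictions that fall within +/- margin of the true value.
    hits = sum(abs(t - p) <= margin for t, p in zip(y_true, y_pred))
    return hits / len(y_true)


# Made-up sale prices and predictions, in dollars (not from the Kaggle data).
actual = [208500, 181500, 223500, 140000]
predicted = [205000, 190000, 221000, 152000]

score = accuracy_within_margin(actual, predicted)
print(score)  # 0.5: two of the four predictions are within $5000
```

Under this reading, an accuracy of ~0.15 would mean roughly 15% of predicted prices fall within $5000 of the actual price.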

Deep learning

Deep learning is available in scikit-learn as `MLPRegressor` (for regression) or `MLPClassifier` (for classification). Using `MLPRegressor`, we find:

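```python
from sklearn.neural_network import MLPRegressor

# X_train and y_train are the training split of the house-price data,
# prepared elsewhere in the notebook.
mlp = MLPRegressor(alpha=0.001, max_iter=1000,
                   activation="relu", solver="lbfgs",
                   random_state=1)
mlp = mlp.fit(X_train, y_train)
```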

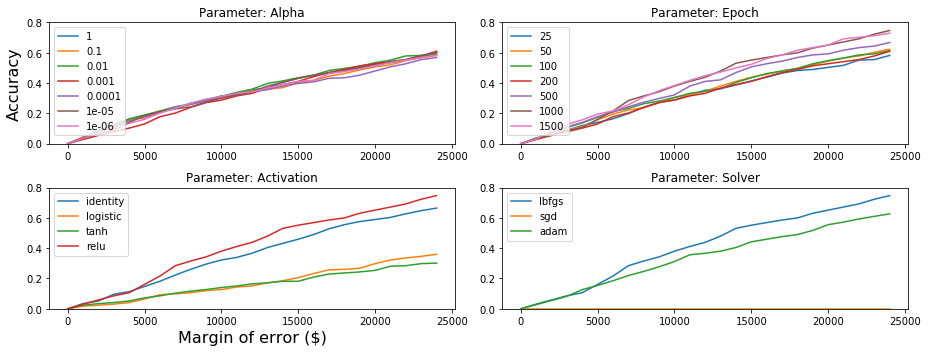

There are many parameters to tune; here we tuned four.

(see the Jupyter Notebook for run settings)

- Indeed, predictions from the deep and non-deep methods are comparable
- Changing the `alpha` values does not change the accuracy much
- 1000+ `epochs` seem to give steady accuracies
- “relu” and “identity” `activation` do comparably better than “tanh” and “logistic”
- “sgd” `solver` does comparatively poorly
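A comparison like the one summarized above could be run with a small loop over settings. This is a minimal sketch, not the notebook's code: it uses a synthetic dataset from `make_regression` as a stand-in for the house-price data, standardizes features and target (an added assumption, so that "sgd" stays numerically stable), and reports scikit-learn's default R² score rather than the margin-of-error accuracy used in the table.

```python
import warnings
from itertools import product

from sklearn.datasets import make_regression
from sklearn.exceptions import ConvergenceWarning
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPRegressor
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in data (assumption: NOT the Kaggle House Prices set).
X, y = make_regression(n_samples=300, n_features=10, noise=10.0, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=1)

# Standardize features and target using training-set statistics only.
scaler = StandardScaler().fit(X_train)
X_train, X_test = scaler.transform(X_train), scaler.transform(X_test)
y_mean, y_std = y_train.mean(), y_train.std()
y_train, y_test = (y_train - y_mean) / y_std, (y_test - y_mean) / y_std

activations = ["identity", "logistic", "tanh", "relu"]
solvers = ["lbfgs", "sgd"]

results = {}
with warnings.catch_warnings():
    # Some settings may not converge within max_iter; silence that warning here.
    warnings.simplefilter("ignore", category=ConvergenceWarning)
    for activation, solver in product(activations, solvers):
        mlp = MLPRegressor(alpha=0.001, max_iter=500,
                           activation=activation, solver=solver,
                           random_state=1)
        mlp.fit(X_train, y_train)
        # score() returns R^2 on the held-out split.
        results[(activation, solver)] = mlp.score(X_test, y_test)

# Print the settings from best to worst held-out R^2.
for (activation, solver), r2 in sorted(results.items(), key=lambda kv: -kv[1]):
    print(f"{activation:>8} {solver:>5}  R^2 = {r2:.3f}")
```

The ranking on synthetic data will not necessarily match the house-price results; the point is only the shape of the loop. Adding `alpha` or `max_iter` to the `product(...)` call extends the sweep to the other two parameters.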