The Jupyter Notebook for this little project is found here.

Yesterday we explored the Kaggle HR dataset to answer a few dashboard-type questions. Moving beyond the scope of a data analyst and into the scope of a data scientist, I asked the following:

Given the following features, can we predict a person’s salary, and can we identify which features are most informative for that prediction?

- satisfaction_level

- last_evaluation

- number_project

- average_montly_hours

- time_spend_company

- work_accident

- left_workplace

- promotion_last_5years
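The post never shows how `X` and `Y` are built, so here is a minimal sketch of one way to do it with pandas. The label column name `salary`, the helper names, and the randomly generated stand-in frame are all assumptions made for illustration. The value ranges are arbitrary and the frame is not the Kaggle data.

```python
import numpy as np
import pandas as pd

# Feature columns as listed in the post (spelling kept as in the dataset).
FEATURES = [
    "satisfaction_level", "last_evaluation", "number_project",
    "average_montly_hours", "time_spend_company", "work_accident",
    "left_workplace", "promotion_last_5years",
]
TARGET = "salary"  # assumption: name of the label column


def make_stand_in_frame(n_rows=200, seed=0):
    """Build a random frame shaped like the HR data (NOT the real data)."""
    rng = np.random.default_rng(seed)
    return pd.DataFrame({
        "satisfaction_level": rng.uniform(0, 1, n_rows),
        "last_evaluation": rng.uniform(0, 1, n_rows),
        "number_project": rng.integers(2, 8, n_rows),
        "average_montly_hours": rng.integers(90, 311, n_rows),
        "time_spend_company": rng.integers(2, 11, n_rows),
        "work_accident": rng.integers(0, 2, n_rows),
        "left_workplace": rng.integers(0, 2, n_rows),
        "promotion_last_5years": rng.integers(0, 2, n_rows),
        TARGET: rng.choice(["low", "medium", "high"], n_rows),
    })


def split_features_target(df, features=FEATURES, target=TARGET):
    """Return the feature matrix X and the label vector Y."""
    return df[features], df[target]


df = make_stand_in_frame()
X, Y = split_features_target(df)
print(X.shape, Y.shape)
```

With the real data, `make_stand_in_frame()` would be replaced by loading the CSV into a DataFrame and renaming columns to match the list above.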

Approach

- Split the dataset into train (80%) and test (20%) sets

- Train a random forest classifier

- Evaluate model prediction accuracy

- Identify important features

Random Forest Classifier

When we train the classifier on the 80% training split and score it on the held-out 20%, we get an accuracy of 96.4%.

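from sklearn.ensemble import RandomForestClassifier

# Split the data set in row order: train on the first 80%, test on the last 20%
# (no shuffling, so the result depends on how the rows are ordered)
n = len(X)
n_80 = int(n * .8)
X_train, Y_train = X[:n_80], Y[:n_80]
X_test, Y_test = X[n_80:], Y[n_80:]

# Train classifier with scikit-learn's default settings
rfc = RandomForestClassifier()
rfc.fit(X_train, Y_train)

# Evaluate accuracy: fraction of test rows whose predicted label matches the true label
sum(rfc.predict(X_test) == Y_test)/len(X_test)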

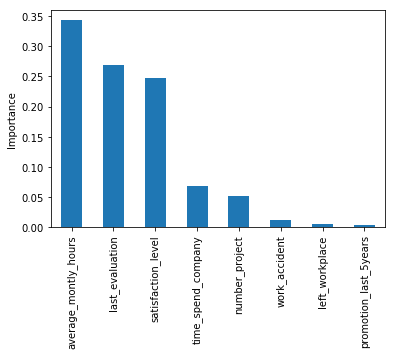
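The split above takes rows in their stored order. If the rows happen to be sorted by some column, a shuffled and stratified split is a common alternative. The sketch below uses scikit-learn's `train_test_split` and `accuracy_score` on synthetic data from `make_classification`. The `*_demo` names and the data are assumptions for illustration, so the printed accuracy says nothing about the HR dataset.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in for X and Y (assumption: not the HR data).
X_demo, Y_demo = make_classification(
    n_samples=500, n_features=8, n_informative=3, n_redundant=0,
    n_classes=3, n_clusters_per_class=1, random_state=0,
)

# Shuffle before splitting and keep class proportions equal in both sets.
X_tr, X_te, Y_tr, Y_te = train_test_split(
    X_demo, Y_demo, test_size=0.2, shuffle=True,
    stratify=Y_demo, random_state=0,
)

# Fix the seed so the forest is reproducible between runs.
model = RandomForestClassifier(random_state=0)
model.fit(X_tr, Y_tr)

accuracy = accuracy_score(Y_te, model.predict(X_te))
print(f"held-out accuracy: {accuracy:.3f}")
```

Setting `random_state` on both the split and the forest makes the number repeatable, which helps when comparing runs with different feature sets.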

Important features

We find the most important features in predicting salary are (1) average_montly_hours, (2) last_evaluation, and (3) satisfaction_level.
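The post does not show how the ranking was computed. One plausible route, assumed here, is the fitted forest's impurity-based `feature_importances_` attribute. The sketch below ranks the eight column names on a toy dataset whose label depends only on `satisfaction_level`, so its ranking is illustrative and does not reproduce the result above.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestClassifier

feature_names = [
    "satisfaction_level", "last_evaluation", "number_project",
    "average_montly_hours", "time_spend_company", "work_accident",
    "left_workplace", "promotion_last_5years",
]

# Toy data (assumption): random features, label driven by one column only.
rng = np.random.default_rng(0)
X_demo = pd.DataFrame(
    rng.normal(size=(300, len(feature_names))), columns=feature_names
)
Y_demo = (X_demo["satisfaction_level"] > 0).astype(int)

forest = RandomForestClassifier(n_estimators=100, random_state=0)
forest.fit(X_demo, Y_demo)

# Pair each importance score with its column name, largest first.
importances = pd.Series(forest.feature_importances_, index=feature_names)
ranked = importances.sort_values(ascending=False)
print(ranked)
```

Impurity-based scores sum to 1 across features, so each value can be read as a share of the forest's total split quality.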

Prediction accuracy with vs without important features

We next asked how the prediction accuracy would change if we trained and predicted on only the important features versus only the non-important features.

When we consider only the three important features, prediction accuracy is 95.0%. (This is comparable to the 96.4% accuracy of the model trained on all features.) Without those three features, the accuracy drops to 57.2%.
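A comparison like this can be sketched by training one forest per column subset and scoring each on the same held-out rows. The helper `subset_accuracy`, the shuffled split, and the toy data (where the label depends only on the three "important" columns) are assumptions for illustration, not the notebook's code.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split


def subset_accuracy(X, Y, columns, seed=0):
    """Train a forest on `columns` only and return held-out accuracy."""
    X_tr, X_te, Y_tr, Y_te = train_test_split(
        X[columns], Y, test_size=0.2, random_state=seed
    )
    model = RandomForestClassifier(random_state=seed).fit(X_tr, Y_tr)
    return model.score(X_te, Y_te)


important = ["average_montly_hours", "last_evaluation", "satisfaction_level"]
others = [
    "number_project", "time_spend_company", "work_accident",
    "left_workplace", "promotion_last_5years",
]

# Toy data (assumption): the label is a function of the important columns.
rng = np.random.default_rng(0)
X_demo = pd.DataFrame(rng.normal(size=(400, 8)), columns=important + others)
Y_demo = (X_demo[important].sum(axis=1) > 0).astype(int)

acc_important = subset_accuracy(X_demo, Y_demo, important)
acc_others = subset_accuracy(X_demo, Y_demo, others)
print(f"important only: {acc_important:.3f}")
print(f"others only:    {acc_others:.3f}")
```

Using the same `seed` for both calls keeps the train and test rows identical across subsets, so any gap in accuracy comes from the columns and not from a different split.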