While browsing the r/datasets subreddit for project ideas, I was inspired to start a new project. The goal is to analyze Kickstarter data.

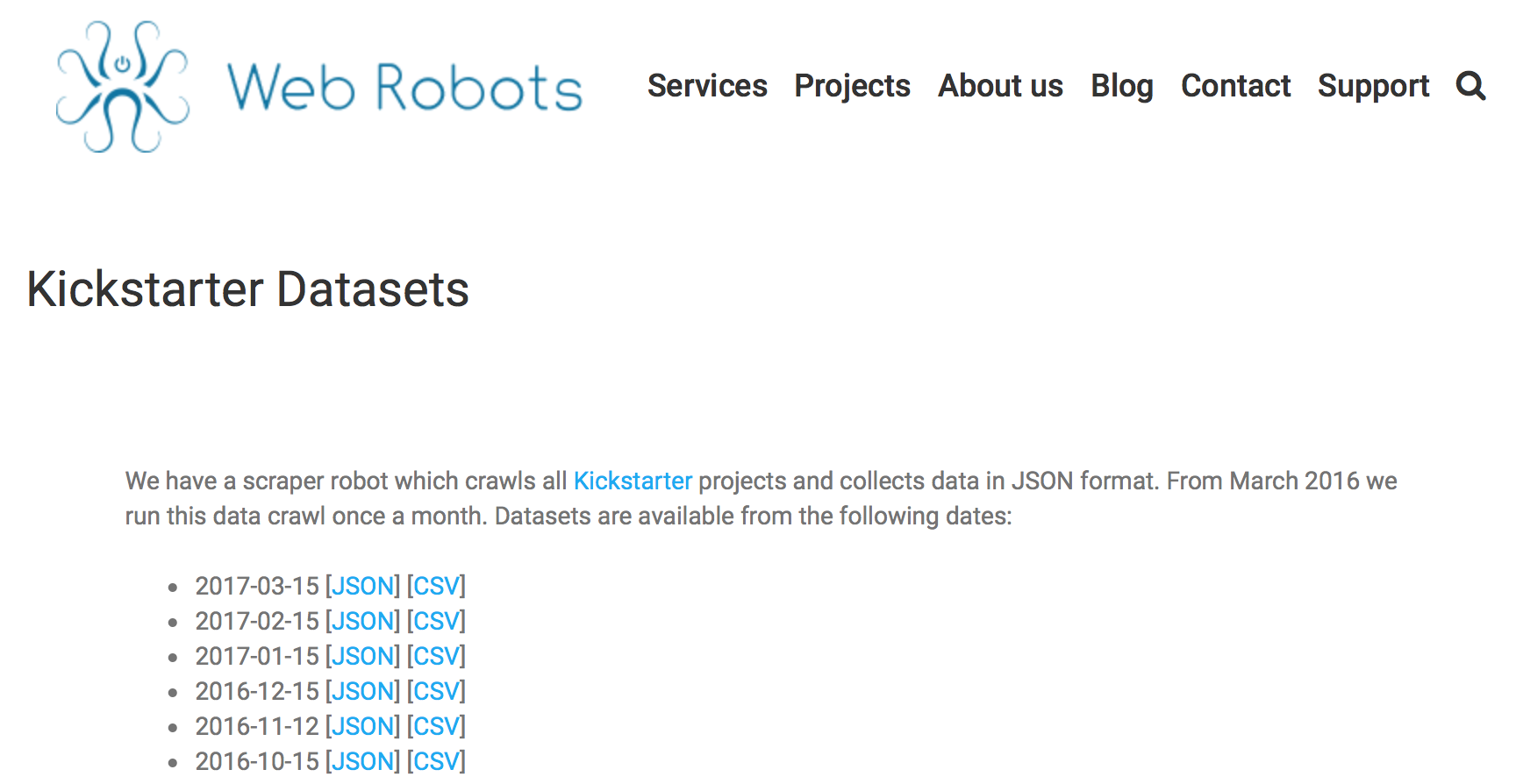

Web Robots

Before any analysis can be done, I need data. Web Robots is a service that does web scraping and crawling. They provide some free data and projects, and the Kickstarter dataset is one of them.

To download the data, one needs to click on the JSON or CSV download link.

I didn’t want to do this manually.

Scraping for the date and URL

I used Python’s BeautifulSoup to obtain the date and URL of the latest dataset version.

from bs4 import BeautifulSoup

import requests

the_url = "https://webrobots.io/kickstarter-datasets"

r = requests.get(the_url)

soup = BeautifulSoup(r.text, "html5lib")

latest = soup.body.find("div", {"id": "main"}).find("li")

latest_date = latest.text.split(' ', 1)[0]

latest_json, latest_csv = [v["href"] for v in latest.find_all("a")]
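Since the date is later used as a version label, it can help to validate it right after scraping. The following is a minimal sketch, assuming the list item text starts with an ISO-style date such as `2019-05-16`; that format, the sample text, and the helper name `parse_latest_date` are my assumptions and are not confirmed by the page markup shown here.

```python
from datetime import date, datetime


def parse_latest_date(item_text: str) -> date:
    """Return the leading date of a list item's text as a date object.

    Raises ValueError if the first token is not in YYYY-MM-DD form
    (an assumed format).
    """
    first_token = item_text.strip().split(" ", 1)[0]
    return datetime.strptime(first_token, "%Y-%m-%d").date()


# Hypothetical list item text, standing in for latest.text
sample_text = "2019-05-16 [JSON] [CSV]"
print(parse_latest_date(sample_text))
```

If the page layout changes and the first token is no longer a date, this fails loudly instead of silently producing a wrong version label.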

Downloading the zip data

I then downloaded the zipped CSV files from that URL.

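import requests, zipfile, io

import os

# Assumed target folder; the original snippet does not show where download_dir is set

download_dir = "data"

zip_file_url = latest_csv

r = requests.get(zip_file_url)

z = zipfile.ZipFile(io.BytesIO(r.content))

# Extract into the same folder that holds the version tag

z.extractall(download_dir)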
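The extraction step can be tried without touching the network. The sketch below builds a tiny archive in memory to stand in for `r.content` and unpacks it into a temporary folder; the helper name `extract_zip_bytes` and the file name `Kickstarter.csv` are made up for illustration.

```python
import io
import os
import tempfile
import zipfile


def extract_zip_bytes(content: bytes, target_dir: str) -> list:
    """Unpack an in-memory zip archive into target_dir and return its member names."""
    os.makedirs(target_dir, exist_ok=True)
    with zipfile.ZipFile(io.BytesIO(content)) as archive:
        archive.extractall(target_dir)
        return archive.namelist()


# Build a small archive in memory; a real run would pass r.content instead.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("Kickstarter.csv", "id,name\n1,demo\n")

with tempfile.TemporaryDirectory() as tmp_dir:
    names = extract_zip_bytes(buffer.getvalue(), tmp_dir)
    print(names)
```

Wrapping the archive in a `with` block closes it as soon as extraction is done.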

I also created an empty file whose name records the version of the downloaded data.

download_version = os.path.join(download_dir, "_version."+latest_date)

# Tag the downloaded version

open(download_version, 'a').close()
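To make use of the tag later, the version has to be read back from the file name. Here is one possible sketch, assuming the `_version.` prefix used above and ISO-style dates, so that the newest tag is also the largest string; the helper name `read_downloaded_version` and the sample date are hypothetical.

```python
import os
import tempfile

VERSION_PREFIX = "_version."  # same prefix as the tag file above


def read_downloaded_version(download_dir):
    """Return the newest version tag found in download_dir, or None if there is none."""
    if not os.path.isdir(download_dir):
        return None
    versions = [
        name[len(VERSION_PREFIX):]
        for name in os.listdir(download_dir)
        if name.startswith(VERSION_PREFIX)
    ]
    # ISO dates (YYYY-MM-DD) sort correctly as plain strings (assumed format).
    return max(versions) if versions else None


with tempfile.TemporaryDirectory() as demo_dir:
    print(read_downloaded_version(demo_dir))  # None: nothing tagged yet
    open(os.path.join(demo_dir, VERSION_PREFIX + "2019-05-16"), "a").close()
    print(read_downloaded_version(demo_dir))  # 2019-05-16
```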

Putting it all together

The dataset is updated periodically, but between updates it doesn’t change. I therefore want my download script to be smart enough to skip the download when the local and latest dataset versions are the same:

- If the files have not been downloaded yet, download them

- If the latest version is newer than the downloaded files, download the new version; otherwise, do nothing
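The two rules above can be sketched as a small decision function. This is only an illustration, assuming versions are date strings in `YYYY-MM-DD` form and that a missing local version is represented by `None`; the name `needs_download` and the sample dates are hypothetical.

```python
from datetime import datetime
from typing import Optional

DATE_FORMAT = "%Y-%m-%d"  # assumed format of the version strings


def needs_download(downloaded: Optional[str], latest: str) -> bool:
    """Decide whether the latest dataset should be fetched.

    downloaded: version of the local copy, or None if nothing was downloaded.
    latest: version currently offered on the website.
    """
    if downloaded is None:
        return True  # rule 1: no local files yet
    latest_date = datetime.strptime(latest, DATE_FORMAT)
    downloaded_date = datetime.strptime(downloaded, DATE_FORMAT)
    return latest_date > downloaded_date  # rule 2: only fetch a newer version


print(needs_download(None, "2019-05-16"))          # True
print(needs_download("2019-04-18", "2019-05-16"))  # True
print(needs_download("2019-05-16", "2019-05-16"))  # False
```

Comparing parsed dates rather than raw strings keeps the check correct even if the date format is later changed to one that does not sort alphabetically.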

Overall, I created this script to download the data from Web Robots.