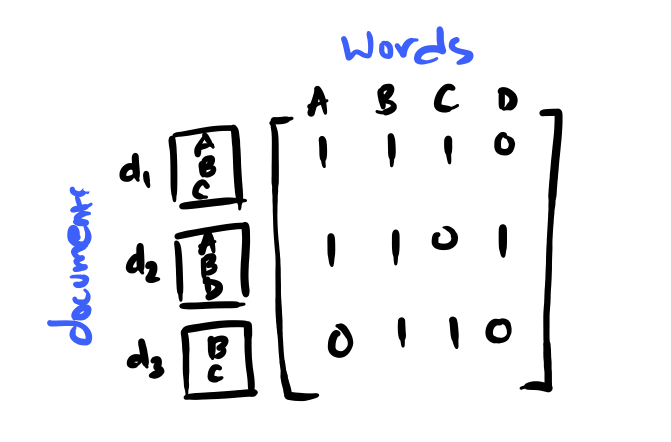

Continuing with the text analysis, I now apply the single-document preprocessing to every document in my corpus in order to build the document-word count matrix.

The Jupyter Notebook for this little project is found here. I also created a Python module containing yesterday’s code here.

Custom Python module

Defining code in modules allows users to share and reuse code. For instance, DatabaseKick is a class that lets me easily connect to and disconnect from my Kickstarter database.

dk = DatabaseKick()

dk.connect()

dk.disconnect()
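To make the connect/disconnect pattern concrete, here is a minimal sketch of what such a class could look like. It is not the actual DatabaseKick code: the class name `DatabaseSketch`, its attributes, and the use of an in-memory SQLite database are all assumptions made purely for illustration.

```python
import sqlite3


class DatabaseSketch:
    """Hypothetical stand-in for DatabaseKick, backed by an in-memory SQLite database."""

    def __init__(self, path=":memory:"):
        self.path = path          # assumption: the real class likely stores credentials instead
        self.connection = None

    def connect(self):
        # Open the connection only if one is not already open
        if self.connection is None:
            self.connection = sqlite3.connect(self.path)
        return self.connection

    def disconnect(self):
        # Close the connection and forget it so connect() can be called again
        if self.connection is not None:
            self.connection.close()
            self.connection = None


db = DatabaseSketch()
db.connect()
answer = db.connection.execute("SELECT 1 + 1").fetchone()[0]
db.disconnect()
```

Keeping the connection on the object means the rest of the notebook only ever calls `connect()` and `disconnect()`, which is the convenience described above.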

One thing to keep in mind: for the module to be importable, its directory must either be listed in the PYTHONPATH environment variable or be appended to sys.path in the new Python script.

# Appending path of module

import sys

sys.path.append("../python/")
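A slightly more defensive variant of the same idea is sketched below. The helper name `add_module_dir` is hypothetical; it resolves the relative path against the current working directory (which, in a notebook, is usually the notebook's folder) and avoids adding the same entry twice when a cell is rerun.

```python
import sys
from pathlib import Path


def add_module_dir(relative_dir):
    """Append relative_dir, resolved to an absolute path, to sys.path once."""
    module_dir = str(Path(relative_dir).resolve())
    if module_dir not in sys.path:
        sys.path.append(module_dir)
    return module_dir


# "../python/" is the directory used in this post
added = add_module_dir("../python/")
```

Storing an absolute path means the import keeps working even if the working directory changes later in the session.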

Decorator functions

Before I can create my count matrix (next section), I need to modify the output of the text_processing function. Instead of a list of words per document, I need a single string per document (i.e., the list of words joined by whitespace).

One way to modify functions in Python is to use decorator functions. A decorator takes a function as input and returns a function as output, typically a wrapped version of the input with modified behavior. Ayman Farhat (2014) has a nice guide to Python’s function decorators.

# Original function

text_processing = preprocess.text_processing

# Decorator function

# which joins the output of func by whitespace

def join_output(func):
    def func_wrapper(text, *arg, **karg):
        return ' '.join(func(text, *arg, **karg))
    return func_wrapper

# Decorate function

text_processing = join_output(text_processing)

In the example, after being decorated by join_output, text_processing now outputs a string.
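The same decorator can be tried on a toy function to see the effect in isolation. In this sketch, `toy_processing` is a hypothetical stand-in for text_processing (it only splits and lowercases), and `functools.wraps` is an optional addition that keeps the wrapped function's name and docstring.

```python
from functools import wraps


def join_output(func):
    """Wrap func so its list-of-words output is joined into one string."""
    @wraps(func)  # preserve the original function's name and docstring
    def func_wrapper(text, *args, **kwargs):
        return ' '.join(func(text, *args, **kwargs))
    return func_wrapper


@join_output  # equivalent to: toy_processing = join_output(toy_processing)
def toy_processing(text, method="lower"):
    """Hypothetical stand-in for text_processing: split on whitespace."""
    words = text.split()
    if method == "lower":
        words = [w.lower() for w in words]
    return words


result = toy_processing("Fund My Board Game")
print(result)  # fund my board game
```

The `@join_output` line is just shorthand for the explicit reassignment used above.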

# Preprocess documents

list_of_documents = df['document'].apply(lambda x: text_processing(x, method="stem"))

This step took 9.5 minutes!

Creating the document-word count matrix

To convert a collection of text documents to a matrix of token counts, I use the sklearn.feature_extraction.text.CountVectorizer class. Its fit_transform method takes as input a list of documents (i.e., a list of strings) and produces a sparse document-word count matrix in CSR format (reported as type scipy.sparse.csr.csr_matrix).

from sklearn.feature_extraction.text import CountVectorizer

count_vect = CountVectorizer()

mat = count_vect.fit_transform(list_of_documents)

# Convert to a dense array for display (memory-hungry for a large corpus)

mat.toarray()
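To show what the resulting matrix contains, here is a standard-library sketch on three toy documents. It is not how CountVectorizer is implemented: tokens are simply split on whitespace here, whereas CountVectorizer by default also lowercases the text and ignores single-character tokens. The toy documents are made up for illustration.

```python
from collections import Counter

# Toy corpus: already-preprocessed documents, one string each
docs = ["board game fund", "fund film film", "game"]

# Vocabulary: every distinct token, sorted, mapped to a column index
tokens = sorted({word for doc in docs for word in doc.split()})
vocabulary = {word: col for col, word in enumerate(tokens)}

# One row per document, one column per vocabulary word
matrix = []
for doc in docs:
    counts = Counter(doc.split())
    matrix.append([counts.get(word, 0) for word in vocabulary])

print(vocabulary)  # {'board': 0, 'film': 1, 'fund': 2, 'game': 3}
print(matrix)      # [[1, 0, 1, 1], [0, 2, 1, 0], [0, 0, 0, 1]]
```

Most entries are zero even in this tiny example, which is why a sparse format is used for a real corpus.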

The matrix was then saved with the save_sparse_csr function (and loaded with the load_sparse_csr function) defined by Dennis Golomazov (2017) in Save / load scipy sparse csr_matrix in portable data format.
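The idea behind such helpers can be sketched as follows. This is not a copy of the referenced code: the function bodies below are my assumption of how the approach works, namely storing the three arrays that define a CSR matrix (data, indices, indptr) together with its shape in a NumPy .npz archive. The toy matrix and file name are illustrative.

```python
import os
import tempfile

import numpy as np
from scipy.sparse import csr_matrix


def save_sparse_csr(filename, matrix):
    # Store the three CSR arrays plus the shape in one .npz archive
    np.savez(filename, data=matrix.data, indices=matrix.indices,
             indptr=matrix.indptr, shape=matrix.shape)


def load_sparse_csr(filename):
    # Rebuild the CSR matrix from the stored arrays
    with np.load(filename) as loader:
        return csr_matrix(
            (loader["data"], loader["indices"], loader["indptr"]),
            shape=tuple(loader["shape"]),
        )


# Round trip on a small toy matrix
toy = csr_matrix(np.array([[1, 0, 1, 1], [0, 2, 1, 0], [0, 0, 0, 1]]))
path = os.path.join(tempfile.mkdtemp(), "counts.npz")
save_sparse_csr(path, toy)
restored = load_sparse_csr(path)
```

SciPy also ships `scipy.sparse.save_npz` and `scipy.sparse.load_npz`, which may be a simpler option when a recent SciPy version is available.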

I save intermediate results because I don’t want to rerun this data processing over and over again.